How to Run DeepSeek Locally: A Guide for Developers

Introduction

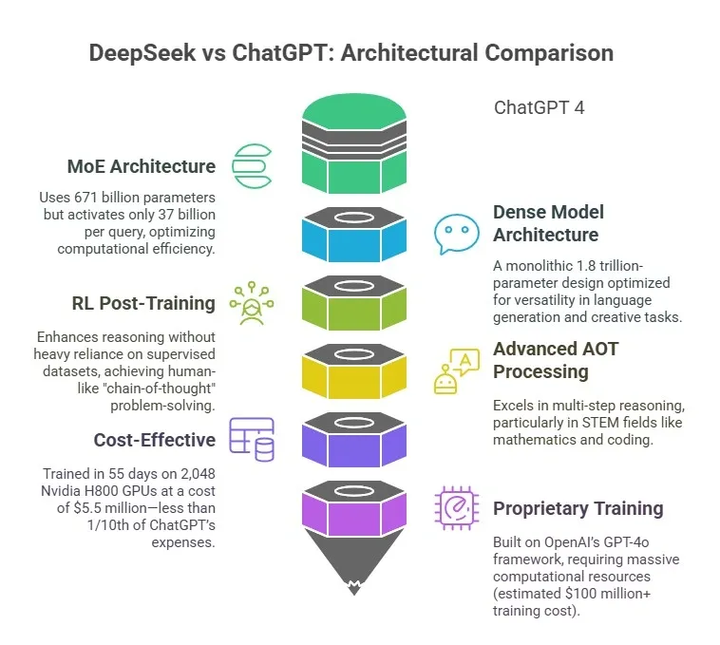

DeepSeek-V3 is a Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated per token. It performs strongly on language understanding, coding, and reasoning tasks. Running it on your own hardware is useful for research, development, and experimentation. This guide shows how to install DeepSeek-V3, resolve common setup problems, and tune its performance.

Prerequisites

Before you begin, make sure your computer meets the following requirements:

- Operating System: It’s best to use Linux, but Windows can work with some extra steps.

- Python Version: You need Python 3.10 or newer.

- Hardware: NVIDIA data-center GPUs such as the H800 are recommended. The full model is far too large for a single consumer GPU, so plan for a multi-GPU server.

- Dependencies: You must have CMake and Triton installed for efficient operation.
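The checklist above can be verified with a short script before installing anything. This is a minimal sketch using only the standard library; the function name `check_prerequisites` is made up for illustration, and the script only tests whether CMake and Triton can be found, not whether their versions are suitable.

```python
import importlib.util
import platform
import shutil
import sys


def check_prerequisites(min_python=(3, 10)):
    """Return a dict mapping each prerequisite to True or False."""
    return {
        # Python 3.10 or newer, as listed in the prerequisites.
        "python_ok": sys.version_info[:2] >= min_python,
        # Linux is the recommended OS; other systems may need extra steps.
        "linux": platform.system() == "Linux",
        # CMake is looked up on PATH; its version is not checked.
        "cmake_found": shutil.which("cmake") is not None,
        # Triton is detected by looking for an importable `triton` package.
        "triton_found": importlib.util.find_spec("triton") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'yes' if ok else 'no'}")
```

A "no" for `linux` is not a failure in itself, since Windows can work with the extra steps described later.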

Step 1: Download DeepSeek-V3

First, clone the DeepSeek-V3 repository from GitHub:

    git clone https://github.com/deepseek-ai/DeepSeek-V3.git

Step 2: Install Necessary Packages

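Next, install the required Python packages using the requirements.txt file. In the official repository this file sits in the inference directory, so run the command from there:

    cd DeepSeek-V3/inference
    pip install -r requirements.txt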

If you face problems with Triton, especially on Windows, follow these steps:

- Download the correct Triton .whl file from this site.

- Install Triton manually:

    pip install triton-3.0.0-cp311-cp311-win_amd64.whl
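Picking the "correct" wheel mostly means matching the tags in its filename to your interpreter. The sketch below reads those tags using the standard wheel naming scheme, in which the last three dash-separated fields are the Python tag, ABI tag, and platform tag. The helper names are illustrative, and the check is deliberately simplified: it handles only single CPython tags such as `cp311` and does not verify the platform tag.

```python
import sys


def wheel_tags(filename):
    """Split a wheel filename into its Python, ABI, and platform tags."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) < 5:
        raise ValueError(f"unexpected wheel filename: {filename}")
    # The last three dash-separated fields are python-abi-platform.
    python_tag, abi_tag, platform_tag = parts[-3:]
    return python_tag, abi_tag, platform_tag


def matches_interpreter(filename, version=None):
    """Check whether a CPython wheel targets the given (major, minor) version."""
    major, minor = version or sys.version_info[:2]
    python_tag, _, _ = wheel_tags(filename)
    return python_tag == f"cp{major}{minor}"


if __name__ == "__main__":
    name = "triton-3.0.0-cp311-cp311-win_amd64.whl"
    print(wheel_tags(name))
    print("matches this interpreter:", matches_interpreter(name))
```

Calling `matches_interpreter` without a version compares the wheel against the Python that is running the script, which is usually the one pip will install into.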

Step 3: Resolve Installation Issues

Common problems you may encounter include:

- Triton Compatibility: Make sure the Triton wheel matches your Python version. For instance, the Triton 3.0 wheel tagged cp311 installs only on Python 3.11.

- PyEE Errors: If issues arise with PyEE, uninstall and reinstall it:

    pip uninstall pyee
    pip install pyee

- Torch Version Mismatch: Ensure your Torch build matches your installed CUDA version and GPU driver.
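To diagnose a Torch mismatch, it helps to see which build is installed and whether it can reach a GPU. The following is a small sketch, not an official diagnostic; `torch_report` is a made-up helper name, and it relies only on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```python
def torch_report():
    """Summarize the installed Torch build, or report that it is missing."""
    try:
        import torch
    except ImportError:
        return {"installed": False}
    return {
        "installed": True,
        "torch_version": torch.__version__,
        # CUDA version Torch was built against; None for CPU-only builds.
        "built_for_cuda": torch.version.cuda,
        # True only if a usable GPU and driver are present at runtime.
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(torch_report())
```

If `built_for_cuda` is `None`, a CPU-only build is installed. If it shows a version but `cuda_available` is `False`, the driver or GPU is the more likely culprit.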

Step 4: Set Up and Run DeepSeek-V3

Once dependencies are installed, configure the model:

- Download the model weights from Hugging Face:

    # Use the appropriate model link

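- Run the inference script. The script name and flag below are illustrative; use the entry point and arguments documented in the repository's README: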

    python inference.py --model_path ./model_weights.bin
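Before launching inference, it can save time to confirm that the download finished. The sketch below assumes the weights arrive as a directory containing a `config.json` and one or more `.safetensors` shards, which is a common Hugging Face layout but differs from the single `model_weights.bin` file in the command above. The function name and the directory layout are assumptions, so adjust them to what you actually downloaded.

```python
from pathlib import Path


def summarize_weights(weights_dir):
    """Return basic facts about a local weights directory."""
    root = Path(weights_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"weights directory not found: {root}")
    # Assumed layout: sharded *.safetensors files next to config.json.
    shards = sorted(root.glob("*.safetensors"))
    total_bytes = sum(p.stat().st_size for p in shards)
    return {
        "has_config": (root / "config.json").is_file(),
        "shard_count": len(shards),
        "total_gib": total_bytes / 1024**3,
    }
```

A shard count of zero, or a total size far below what the model page lists, suggests an interrupted download.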

Step 5: Improve Performance

To enhance performance, consider these optimizations:

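- FP8 Mixed Precision: DeepSeek-V3 was trained with FP8 mixed precision, and running it with FP8 weights uses less memory and speeds up processing compared with BF16.

- Load Balancing: DeepSeek-V3 uses an auxiliary-loss-free load-balancing strategy to spread tokens evenly across experts without degrading model quality.

- Multi-Token Prediction: The model's multi-token prediction (MTP) module can be used for speculative decoding to speed up generation, where your inference framework supports it.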
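The memory effect of precision is easy to estimate: weight memory is roughly the parameter count times the bytes per parameter. The sketch below works this out for DeepSeek-V3's 671B total parameters. The 80 GB per-GPU figure is an assumption chosen for illustration, and the estimate covers weights only, so the KV cache, activations, and framework overhead come on top.

```python
import math

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}


def weight_memory_gb(num_params, dtype):
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(num_params, dtype, gpu_memory_gb):
    """Smallest GPU count whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    total_params = 671e9  # DeepSeek-V3 total parameter count
    for dtype in ("fp8", "bf16"):
        gb = weight_memory_gb(total_params, dtype)
        gpus = min_gpus(total_params, dtype, gpu_memory_gb=80)  # assumed 80 GB GPUs
        print(f"{dtype}: about {gb:.0f} GB of weights, at least {gpus} GPUs")
```

Halving the bytes per parameter halves the weight footprint, which is why FP8 matters for a model of this size.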

Troubleshooting Tips

- Timeout Errors: Ensure your internet connection is stable when downloading large files.

- GPU Memory Issues: Reduce the batch size or the maximum sequence length. If you are fine-tuning rather than running inference, gradient checkpointing also lowers memory use.

- Language Inconsistency: If responses are inconsistent in languages other than English, fine-tune the model on data for your target language.
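For timeout errors during large downloads, retrying with exponential backoff is a common remedy. The helper below is a generic sketch and not part of DeepSeek-V3 or any download library. The name `retry` and its parameters are illustrative, and the exception types your download tool raises may differ, in which case pass them through `retry_on`.

```python
import time


def retry(func, attempts=5, base_delay=1.0,
          retry_on=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Call func(), retrying with exponential backoff on transient errors."""
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait 1x, 2x, 4x, ... the base delay before the next try.
            sleep(base_delay * 2**attempt)
```

Wrap whatever call performs the download, for example `retry(lambda: fetch_weights())`, where `fetch_weights` stands in for your own download function.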

Conclusion

Running DeepSeek-V3 on your computer opens up many possibilities for developers and researchers. This guide provides the steps to set up DeepSeek-V3, fix common issues, and optimize it for your needs. Whether you’re working on language processing, code generation, or mathematical reasoning, DeepSeek-V3 offers powerful tools for your projects.

For further instructions, explore the official DeepSeek-V3 GitHub repository.